Rivero L.Encyclopedia of database technologies and applications.2006

.pdf110

Data Model Versioning and Database Evolution

Hassina Bounif

Swiss Federal Institute of Technology, Switzerland

INTRODUCTION

In the field of computer science, we are currently facing a major problem designing models that evolve over time. This holds in particular for the case of databases: Their data models need to evolve, but their evolution is difficult. User requirements are now changing much faster than before for several reasons, among them the changing perception of the real world and the development of new technologies. Databases show little flexibility in terms of supporting changes in the organization of their schemas and data. Database evolution approaches maintain current populated data and software application functionalities when changing database schema. Data model versioning is one of these chosen approaches used to resolve the evolution of conventional and nonconventional databases such as temporal and spatialtemporal databases. This article provides some background on database evolution and versioning technique fields. It presents the unresolved issues as well.

BACKGROUND

A major problem in computer science is how to elaborate designs that can evolve over time in order to avoid having to undergo redesign from scratch. Database designs are a typical example where evolution is both necessary and difficult to perform. Changes to database schema have to be enabled while maintaining the currently stored data and the software applications using the data in the database. One of the existing approaches used to resolve difficulties in database evolution is data model versioning. These techniques are the main focus of this article.

To avoid misinterpretation, we first recall the definition of the meaning of the basic concepts: schema versioning, schema evolution, schema modification, and schema integration. These concepts are all linked to the database evolution domain at different levels, but according to most of the research focusing on database evolution (Roddick, 1995), there is a consistent distinction between them.

•Schema Versioning means either creating from the initial maintained database schema or deriving from

the current maintained version different schemas called versions, which allow the querying of all data associated with all the versions as well as the initial schema according to user or application preferences. Partial schema versioning allows data updates through references to the current schema, whereas full schema versioning allows viewing and update of all present data through user-definable version interfaces (Roddick, 1995).

•Schema Evolution means modifying a schema within a populated database without loss of existing data. When carrying out an evolution operation, there are two problems that should be considered: on one hand, the semantics of change, i.e., their effects on the schema; and, on the other hand, change propagation, which means the propagation of schema changes to the underlying existing instances (Peters & Özsu, 1997). Schema evolution is considered a particular case of schema versioning in which only the last version is retained (Roddick, 1995).

•Schema Modification means making changes in the schema of a populated database: The old schema and its corresponding data are replaced by the new schema and its new data. This may lead to loss of information (Roddick, 1995).

•Schema Integration means combining or adding schemas of existing or proposed databases with or to a global unified schema that merges their structural and functional properties. Schema integration occurs in two contexts: (1) view integration for one database schema and (2) database integration in distributed database management, where a global schema represents a virtual view of all database schemas taken from distributed database environments. This environment could be homogenous, i.e., dealing with schemas of databases having the same data model and identical DBMSs (database management systems), or heterogeneous, which deals with a variety of data models and DBMSs. This second environment leads to what is called federated database schemas. Some research works consider schema integration a particular case of schema evolution in which the integration of two or more schemas takes place by choosing one and applying the facts in the others (Roddick, 1995).

Copyright © 2006, Idea Group Inc., distributing in print or electronic forms without written permission of IGI is prohibited.

TEAM LinG

Data Model Versioning and Database Evolution

This article is organized as follows. The next section reviews some background related to database evolution and focuses on the versioning data model. In the Future Trends section, we present some emerging trends. The final section gives the conclusion and presents some research directions.

MAIN THRUST

Versioning Methods and Data

Conversion Mechanisms

Various mechanisms are used to manage versioning/ evolution of databases according to the data model, the nature of the field of the applications, as well as the priorities adopted (Munch, 1995).

•Revisions (Sequential): consists of making each new version a modification of the most recent one. At the end, the versions sequentially form a single linked list called a revision chain (Munch, 1995). Each version in the chain represents an evolution of the previous one. This method is used for versioning schemas. This technique is not very effective because many copies of the database are created as schema versions. It is adopted by the Orion System (Kim & Chou, 1988), in which complete database schemas are created and versioned.

•Variants (Parallel): means changing the relationships for revision from one-to-one to many-to-one, so that many versions may have the same relationship with a common “ancestor” (Munch, 1995). A variant does not replace another, as a revision does, but is instead an alternative to the current version. This versioning method is adapted differently from one database to another depending on the unit (a schema, a type, or a view) to be versioned. For instance, the Encore System (Skarra & Zdonik, 1987) uses type versioning while the Frandole2 system (Andany, Leonard, & Palisser, 1991) uses view versioning. Revisions and variants are usually combined into a common structure named the version graph.

•Merging: consists of combining two or more variants into one (Munch, 1995).

•Change Sets: In this method, instead of having each object individually versioned, the user collects a set of changes to any number of objects and registers them as one unit of change to the database. This collection is termed a change set, or cset.

•Attribution: Used to distinguish versions via val-

ues of certain attributes, for example, a date or a |

D |

status. |

|

|

|

Other authors (Awais, 2003; Connolly & Begg, 2002) |

|

further categorize versions. A version can be transient, |

|

working, or released. |

|

•Transient versions: A transient version is considered unstable and can be updated or deleted. It can also be created from scratch by checking out a released version from a public database or by deriving it from a working or transient version in a private database. In the latter case, the base transient version is promoted to a working version. Transient versions are stored in the creator’s private workspace (Connolly & Begg, 2002).

•Working versions: A working version is considered stable and cannot be updated, but it can be deleted by its creator. It is stored in the creator’s private workspace (Connolly & Begg, 2002).

•Released versions: A released version is considered stable and cannot be updated or deleted. It is stored in a public database by checking in a working version from a private database (Connolly & Begg, 2002).

With respect to data, there are three main data conversion mechanisms that are employed with these versioning methods: (1) Data is simply coerced into the new format;

(2) Data is converted by lazy mechanisms when required; in this mechanism, there is no need to consider unneeded data, and schema changes take place rapidly when change occurs; and (3) Conversion interfaces are used to access data that never undergo physical conversion (Roddick, 1995).

Versioning and Data Models

The unit to be versioned must have (1) a unique and immutable identity and (2) an internal structure. These two criteria have some consequences on the possibilities of versioning within different data models. Object-ori- ented or ER-based data models clearly fulfill these two requirements (Munch, 1995). The adoption of an objectoriented data model is the most common choice cited in the literature (Grandi & Mandreoli, 2003) on schema evolution through schema versioning. The relational model has also been studied extensively. In fact, some problems exist. Tuples, for instance, are simply values which fulfill the requirements for non-atomicity, but they have no useful ID. A user-defined key cannot be used since the

111

TEAM LinG

tuple may be updated and could suddenly appear as another. A complete relation table can, of course, be treated as an object with an ID, but this corresponds more to a type than to a normal object. Solutions for extended relational models have been proposed, such as the RM/T model, which introduces system-defined, unique, and immutable keys.

The management of versions is done with either schema versions or object versions.

•Schema Versions: A version of a schema consists of a triplet such as <S, N, v>, where <S, N> are an identification of the schema (S) and the version value

(N). The value of the schema version, v, is a set of classes connected by inheritance relationships and references relationships such as composition.

•Object Versions: An object is associated with a set of versions. Under schema versions, associated objects belong only to one class. Representation and behavior of an object under a class constitute an object version under this class. An object version is a triplet such as <O, N, v>, in which O is an object identifier, N, an integer, and v, the value of the version of an object. Object versions are identified by the couple <O, N>. Generally, in models, an object has at least one version under a class; N is that of the corresponding class. Value v is defined in the type of the associated class. Three operations associated with the concept of object version are: creation of the first object version, deletion of object version, and derivation of a new object version.

Transparency of Versioning

The management of schema versions or object versions is clear to the user, who does not have to constantly worry about the possible occurrence of versions. In order to gain non-versioned access to data stored in a versioned database, there are several alternative solutions (Munch, 1995).

1.Data is checked out to a workspace, where applications can access them freely, and then checked in to the database when they are no longer being used. This technique is most often used when dealing with files.

2.A layer is built on top of the versioned database which provides a standard interface and hides the details of the database, as well as the versioning. This technique is useful also for non-versioned databases.

3.Version selection is not part of the normal access functions of the database. Versioning is set up at the start and possibly the end of a change or a long transaction.

Data Model Versioning and Database Evolution

There are two main version storage strategies, which are:

•Versioning-by-copy: A new version of an object is implemented by making a copy of the object, assigning the copy a version number, and updating references to its predecessors. This technique consumes more disk space but provides a form of backup (Awais, 2003).

•Versioning-by-difference: It is also known as deltaversioning and requires that the differences between the new version and its predecessors be kept. Although this technique saves the disk space, it increases overheads since the DBMS needs to rebuild the particular version each time it has to be accessed (Awais, 2003).

Versioned Database Functionality

The most fundamental operations that a DBMS has to provide to allow the versioning process are (Munch, 1995):

•creating new versions;

•accessing specific versions through a version selection mechanism;

•adding user-defined names or identifiers to versions;

•deleting versions (some restrictions are made on which versions are allowed to be deleted, if any at all);

•maintaining special relationships between versions, e.g., “revision_of” or “variant_of”;

•changing existing versions if possible;

•“freezing” certain versions so that they cannot be changed, if change is possible;

•merging variants, automatically and/ or manually;

•attaching status values or other attributes to versions.

Example of a Database Management System with Versioning

Orion (Kim & Chou, 1988) supports schema versions. It introduces invariants and rules to describe the schema evolution in OODBs (object-oriented databases). Orion defines five invariants and 12 rules for maintaining the invariants. The schema changes allowed are classified into three categories:

•Changes to the contents of a class;

•Changes to the inheritance relationship between classes;

112

TEAM LinG

Data Model Versioning and Database Evolution

•Changes to the class as a whole (adding and dropping entire classes)

In the following, some of these changes are described.

1.Add an attribute V to a class C: If V causes a name conflict with an inherited attribute, V will override the inherited attribute. If the old attribute was locally defined in C, it is replaced by the new definition. Existing instances to which V is added receive the null value.

2.Drop an attribute V from a class C and subclasses of C that inherited it. Existing instances of these classes lose their values for V. If C or any of its subclasses has other superclasses that have attributes of the same name then it inherits one of them.

3.Make a class S a superclass of a class C: The addition of a new edge from S to C must not introduce a cycle in the class lattice. C and its subclasses inherit attributes and methods from S.

4.Add a new class C: All attributes and methods from all superclasses specified for C are inherited unless there are conflicts. If no superclasses of C are specified, the system-defined root of the class lattice (in this case, CLASS) becomes the superclass of C.

5.Drop an existing class C: All edges from C to its subclasses are dropped. This means that the subclasses will lose all the attributes and methods they inherited from C. Then all edges from the superclasses of C to C are removed. Finally, the definition of C is dropped and C is removed from the lattice. Note that the subclasses of C continue to exist.

These are some rules for versioning of schemas.

•Schema-Version Capability Rule: A schema version may either be transient or working. A transient version may be updated or deleted, and it may be promoted to a working schema version at any time. A working schema version, on the other hand, cannot be updated. Further, a working schema version can be demoted to a transient schema version if it has no child schema version.

•Direct-Access-Scope Update Rule: The access scope of a schema version is non-updatable under that version if any schema version has been derived from it unless each of the derived schemas has blocked the inheritance from its parent or alternatively has left the scope of its parent.

FUTURE TRENDS

D

Current research on the evolution of information systems and particularly on versioning databases is investigating ways of refining the versioning model by proposing new combined versioning approaches that aim to reduce the number of schema versions to a minimum in order to reduce the database access time and the database space consumption. An example can be seen in the framework based on the semantic approach that defines reasoning tasks in the field of description logic for evolving database schemas (Franconi, Grandi, & Mandreoli, 2000; Grandi & Mandreoli, 2003). Researchers are also concentrating on other new domains that seem more promising and similar to their research domain, such as:

Evolution Based on Ontology

Approach

Ontology evolution is not similar to database evolution even though many issues related to ontology evolution are exactly the same as those in schema evolution (Noy & Klein, 2002). For example, database researchers distinguish between schema evolution and schema versioning, while, for ontologies, there is no distinction between these two concepts. However, ontologies are used to resolve database evolution issues, as in works that present ontology of change (Lammari, Akoka, & Comyn-Wattiau, 2003). This ontology is a semantic network that classifies an evolution or a change into one or more categories depending on the significance of the change, its semantics, and the information and application dependency.

CONCLUSION

Although the subject of database evolution has been extensively addressed in the past, for relational and ob- ject-oriented data models in temporal and other variants of databases, a variety of techniques has been proposed to execute change in the smoothest way to maintain data and application functionalities. Many research works have also placed their focus on conceptual schema evolution independent of any logical data model orientation, and their investigation has concentrated on schema stability, schema quality, schema changes, and schema semantics (Gómez&Olivé,2002,2003).Anexampleisthecase of the work developed in Wedemeijer (2002). It proposes a framework consisting of a set of metrics that control the stability of conceptual schemas after evolution. Despite this, database evolution still remains a hot research subject.

113

TEAM LinG

Data model versioning is one of the solutions approved, and the versioning principles can be applied universally to many different forms of data, such as text files, database objects and relationships, and so on, despite certain negative points that lead us to consider other possible improvements. For example, the revision versioning approach generates high costs and requires much memory space as the database evolves, which will be difficult to support in the long term. Current works on database evolution prefer developing approaches that combine versioning with other approaches like the modi- fication-versioning approach or versioning-view approach.

Concerning versioning perspectives, despite the many unresolved issues, such as refining the versioning model or speed of access to data through the different versions, versioning is gaining ground each day, and the work in schema versioning is extending. It includes both multitemporal and spatial databases (Grandi & Mandreoli, 2003; Roddick, Grandi, Mandreoli, & Scalas, 2001).

REFERENCES

Andany, J., Leonard, M., Palisser, C. (1991). Management of schema evolution in databases. In Proceedings of the 17th International Conference on Very Large Databases, San Mateo, CA. Morgan Kaufmann.

Awais, R. (2003). Aspect-oriented database systems. Berlin, Heidelberg, Germany: Springer-Verlag.

Connolly, T., & Begg, C. (2002). Database systems: A practical approach to design, implementation, and management (3rd ed.). Harlow: Addison-Wesley.

Franconi, E., Grandi, F., & Mandreoli, F. (2000, September). Schema evolution and versioning: A logical and computational characterisation. Proceedings of the Ninth International Workshop on Foundations of Models and Languages for Data and Objects, FoMLaDO/ DEMM2000, Lecture Notes in Comptuer Science 2348, Dagstuhl Castle, Germany. Springer.

Gómez, C., & Olivé, A. (2002). Evolving partitions in conceptual schemas in the UML. CaiSE 2002, Lecture Notes in Computer Science 2348, Toronto, Canada. Springer.

Gómez, C., & Olivé, A. (2003). Evolving derived entity types in conceptual schemas in the UML. In Ninth International Conference, OOIS 2003, Lecture Notes in Computer Science, Geneva, Switzerland (pp. 3-45). Springer.

Data Model Versioning and Database Evolution

Grandi, F., & Mandreoli, F. (2003). A formal model for temporal schema versioning in object-oriented databases.

Data and Knowledge Engineering, 46(2), 123-167.

Kim, W., & Chou, H.-T. (1988). Versions of schema for object-oriented databases. Proceedings VLDB’88, Los Angelos, CA. Morgan Kaufmann.

Lammari, N., Akoka, J., & Comyn-Wattiau, I. (2003). Supporting database evolution: Using ontologies matching. In Ninth International Conference on Object-Oriented Information Systems, Lecture notes in Computer Science 2817, Geneva, Switzerland. Springer.

Munch, B. P. (1995). Versioning in a software engineering database—The change oriented way. Unpublished doctoral thesis,Norwegian Institute of Technology (NTNU), Trondheim, Norway.

Noy, N. F., & Klein, M. (2002). Ontology evolution: Not the same as schema evolution (Tech. Rep. No. SMI-2002- 0926). London: Springer.

Peters, R. J., & Özsu, M. T. (1997). An axiomatic model of dynamic schema evolution in object-based systems. ACM TODS, 22(1), 75-114.

Roddick, J. F. (1995). A survey of schema versioning issues for database systems. Information and Software Technology, 37(7), 383-393.

Roddick, J. F., Grandi, F., Mandreoli, F., & Scalas, M. R. (2001). Beyond schema versioning: A flexible model for spatio-temporal schema selection. Geoinformatica, 5(1), 33-50.

Skarra, A. H., & Zdonik, S. B. (1987). Type evolution in an object-oriented database. In B. Shriver & P. Wegner (Eds.), Research directions in OO programming. USA: MIT Press.

Wedemeijer, L.(2002). Defining metrics for conceptual schema evolution. In Ninth International Workshop on Foundations of Models and Languages for Data and Objects, FoMLaDO/DEMM2000, Lecture Notes in Computer Science 2065, Dagstuhl Castle, Germany. Springer.

KEY TERMS

Data Model: Defines which information is to be stored in a database and how it is organized.

Federated Database Schemas: (FDBS) Collection of autonomous cooperating database systems working in either a homogenous environment, i.e., dealing with

114

TEAM LinG

Data Model Versioning and Database Evolution

schemas of databases having the same data model and identical DBMSs (database management systems), or a heterogeneous environment.

Schema Evolution: Ability of the database schema to change over time without the loss of existing information.

Schema Integration: Ability of the database schema to be created from the merging of several other schemas.

Schema Modification: Ability to make changes in the database schema. The data corresponding to the past schema are lost or recreated according the new schema.

Schema Versioning: Ability of the database schema

to be replaced by a new version created with one of the D several existing versioning methods.

Temporal Database: Ability of the database to handle a number of time dimensions, such as valid time (the time line of perception of the real world), transaction time (the time the data is stored in the database), and schema time (the time that indicates the format of the data according to the active schema at that time).

Versioning-View and Versioning-Modification: Two combined versioning approaches that aim to create a minimum number of schema versions to reduce database access time and database space consumption.

115

TEAM LinG

116

Data Quality Assessment

Juliusz L. Kulikowski

Institute of Biocybernetics and Biomedical Engineering PAS, Poland

INTRODUCTION

For many years the idea that for high information processing systems effectiveness, high quality of data is not less important than the systems’ technological perfection was not widely understood and accepted. The way to understanding the complexity of the data quality notion was also long, as will be shown in this paper. However, progress in modern information processing systems development is not possible without improvement of data quality assessment and control methods. Data quality is closely connected both with data form and value of information carried by the data. High-quality data can be understood as data having an appropriate form and containing valuable information. Therefore, at least two aspects of data are reflected in this notion: (1) technical facility of data processing and (2) usefulness of information supplied by the data in education, science, decision making, etc.

BACKGROUND

In the early years of information theory development a difference between the quantity and the value of information was noticed; however, originally little attention was paid to the information value problem. Hartley (1928), interpreting information value as its psychological aspect, stated that it is desirable to eliminate any additional psychological factors and to establish an information measure based on purely physical terms only. Shannon and Weaver (1949) created a mathematical communication theory based on statistical concepts, fully neglecting the information value aspects. Brillouin (1956) tried to establish a relationship between the quantity and the value of information, stating that for an information user, the relative information value is smaller than or equal to the absolute information (i.e., to its quantity, chap. 20.6). Bongard (1960) and Kharkevitsch (1960) have proposed to combine the information value concept with the one of a statistical decision risk. This concept has also been developed by Stratonovitsch (1975, chaps. 9-10). This approach leads to an economic point of view on information value as profits earned due to information using (Beynon-Davies, 1998, chap. 34.5). Such an approach to information value assessment is limited to the cases in

which economic profits can be quantitatively evaluated. In physical and technical measurements, data accuracy (described by a mean square error or by a confidence interval length) is used as the main data quality descriptor (Piotrowski, 1992). In medical diagnosis, data actuality, relevance and credibility play a relatively higher role than data accuracy (Wulff, 1981, chap. 2). This indicates that, in general, no universal set of data quality descriptors exists; they rather should be chosen according to the application area specificity. In the last years data quality became one of the main problems posed by World Wide Web (WWW) development (Baeza-Yates & Ribeiro-Neto, 1999, chap. 13.2). The focus in the domain of finding information in the WWW increasingly shifts from merely locating relevant information to differentiating high-qual- ity from low-quality information (Oberweis & Perc, 2000, pp. 14-15). In the recommendations for databases of the Committee for Data in Science and Technology (CODATA), several different quality types of data are distinguished: (1) primary (rough) data, whose quality is subjected to individually or locally accepted rules or constraints, (2) qualified data, broadly accessible and satisfying national or international (ISO) standards in the given application domain, and (3) recommended data, the highest quality broadly accessible data (like physical fundamental constants) that have passed a set of special data quality tests (Mohr & Taylor, 2000, p. 137).

BASIC PROBLEMS OF DATA QUALITY ASSESSMENT

Taking into account the difficulty of data value representation by a single numerical parameter Kulikowski (1969) proposed to characterize it by a vector whose components describe various easily evaluated data quality aspects (pp. 89-105). For a multi-aspect data quality evaluation, quality factors like data actuality, relevance, credibility, accuracy, operability, etc. were proposed. In the ensuing years the list of proposed data quality factors by other authors has been extended up to almost 200 (Pipino, Lee, & Wang, 2002; Shanks & Darke, 1998; Wang & Storey, 1995; Wang Strong, & Firth, 1996). However, in order to make data quality assessment possible it is not enough to define a large number of sophisticated data quality factors. It is also necessary to establish the meth-

Copyright © 2006, Idea Group Inc., distributing in print or electronic forms without written permission of IGI is prohibited.

TEAM LinG

Data Quality Assessment

ods of multi-aspect data quality comparison. For example, there may arise a problem of practical importance—What kind of data in a given situation should be preferred: the more fresh but less credible, or outdated but very accurate ones. A comparison of multi-aspect data qualities is possible when the following formal conditions are fulfilled: (1) The quality factors are represented by nonnegative real numbers, (2) if taken together, they form a vector in a linear semi-ordered vector space, and (3) they are defined so that the multi-aspect quality vector is a nondecreasing function of its components (quality factors; Kulikowski, 1986). For example, if t0 denotes the time of data creation and t is a current time, then for t0 ≤ t neither the difference t0 – t (as not satisfying the first condition) nor t – t0 (as not satisfying the third condition) can be used as data actuality measures. However, if T, such that 0 ≤ t0 ≤ T, denotes the maximum data validity time then for t ≤ T a difference θ = T - t or a normalized difference Θ = θ / T, both satisfying the above-mentioned conditions, can be used as actuality measures characterizing one of data quality aspects. For similar reasons, if ∆ is a finite length of admissible data values and δ is the length of data confidence interval, where 0 < δ < ∆, then c = 1/ δ or C = ∆ /δ, rather than δ, can be used as data accuracy measures.

Comparison of Multi-Aspect Data

Qualities in a Linear Vector Space

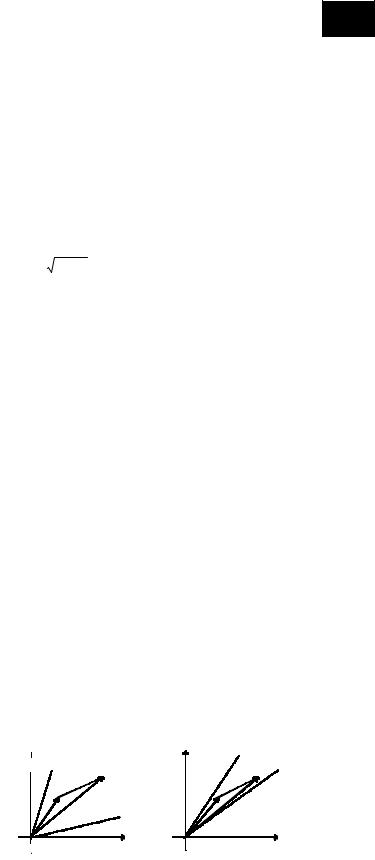

For any pair of vectors Q’, Q” describing multi-aspect qualities of some single data they can be compared according to the semi-ordering rules in the given linear vector space (Akilov & Kukateladze, 1978). Linearity of the vector space admits the operations of vectors adding, multiplying by real numbers and taking the differences of vectors. For comparison of vectors a so-called positive cone K+ in the vector space should be established, as shown in Figure 1. It is assumed that Q’ has lower value than Q” (Q’ F Q”) if their difference ∆Q = Q” - Q’ is a vector belonging to K+. If neither Q’ FQ” nor Q” FQ’ then the vectors Q’,Q” are called mutually incomparable,meaning that no quality vector in this pair with respect to the other one can be preferred. Figure 1a shows a situation of Q’ F Q”, while Figure 1b shows vectors’ incomparability caused by a narrower positive cone (between the dotted lines) being assumed.

The example shows that the criteria of multi-aspect data quality comparability can be changed according to the user’s requirements: They become more rigid when the positive cone K+ established is narrower. In particular, if K+ becomes as narrow as being close to a positive halfaxis, then Q’ FQ” if all components of Q’ are proportional to the corresponding ones of Q” with the coefficient of proportionality <1. On the other hand, if K+ becomes as

large as the positive sector of the vector space, then Q’ FQ” means that all components of Q’ are lower than the corre- D sponding ones of Q” ; in practice the domination of the components of Q” over those of Q’ may be not significant, excepting one or several selected components. Between

the above-mentioned two extremes there is a large variety of data quality vector semi-ordering, including those used in multi-aspect optimization theory. In particular, if Q’ = [q1’, q2’,…, qk’] and Q” = [q1”, q2”,…, qk”] are two vectors, then it can be defined their scalar product:

(Q’, Q”) = q1’× q1” + q2’× q2” + … + qk’× qk”

and the length (a norm) of a vector Q given by the expression:

|| Q ||= (Q,Q)

Then any fixed positive-components vector C of a norm ||C|| = 1 indicates the direction of the K+ cone axis. For any given data quality vector Q, an angle (C, Q) between the vectors C and Q can be calculated from its cosine given by:

cos 4(C, Q) = (C, Q) / ||Q||

This expression also can be used for data quality comparison: For the given pair of quality vectors it is assumed that Q’ F Q” if and only if cos 4 (C, Q’) < cos 4 (C, Q”); i.e., when Q” is closer to C than Q’. This type of vector comparison leads, in fact, to a comparison of weighted linear combinations of data quality factors.

Remarks on Composite Data Quality Assessment

The above-described single data quality assessment principles should be extended to composite data and higherorder data structures. In general, it is not a trivial problem, the higher-order data structures usually being composed of various types of simple data. The quality of a record

Figure 1. Principles of comparison of vectors describing multi-aspect data values

a/ |

Q’ |

K+ |

b/ |

K+ |

q2 |

q2 |

|||

q |

2 |

∆Q |

|

∆Q |

|

|

|

||

|

|

|

|

Q’ |

|

|

Q” |

|

Q” |

|

|

q1 |

|

q1 |

0 |

|

|

0 |

|

117

TEAM LinG

consisting of a linearly ordered set of single data (say, a series of parameters describing the properties of a given physical object) can be defined as a nondecreasing vector function of the component data qualities (Kulikowski, 2002, p. 120).The function can be chosen in various ways. For example, if Q’ and Q” are multi-aspect qualities of two data and q1’, q1” is a pair of their first quality factors, q2’, q2” is a pair of their second quality factors, etc., then the quality of the pair [Q’, Q”] can be defined as:

Q = [min(q1’, q1”), min(q2’, q2”),..., min(qk’, qk”)]

Using other, less rigorous functions, satisfying the general conditions of multi-aspect data quality assessment is also possible. Additional vector components describing composite data quality factors also can be introduced. In particular, they may characterize composite data completeness, irredundancy and/or operability. Similar additional quality factors can be used in higher-order data structures assessment. In distributed databases, increasing attention to data interoperability as an important quality factor is paid (de La Beaujardiere, 2002; Robbins, 2002). Sugawara (2002) states that it may be a solution to provide an integrated view of heterogeneous data sources distributed in many disciplines and also in distant places. For this purpose, standardization of scientific terminology, data standards and metadata standards for document type definition are developed (Lagoze, 2002; Sugawara). Limited quality of data, in general, is not an obstacle in using them for decision making because in recent years various methods of decision making under uncertainty have been developed (Bubnicki, 2002; Kandel & Last & Bunke, 2001). However, as some authors remark (Orr, 1998; Redman, 1998; Ballou & Tayi, 1999), in well-organized databases data quality should be carefully controlled.

FUTURE TRENDS

It can be expected that in the coming years, further progress in data quality assessment and control methods will be achieved. New methods of automatic data quality control based on sophisticated mathematical and/or logical tools and on an artificial intelligence approach will be applied. Some standard algorithms of this type will be included into commercial database management systems. High data quality requirements will be also regulated by international and national data processing standards. A basic role in this domain will be played by worldwide organizations: International Standard Organization (ISO), Committeefor Data in Science and Technology (CODATA), International Federation of Information Processing (IFIP), etc.

Data Quality Assessment

CONCLUSION

In most applications, high data quality is not less important than easy access to data. Data quality is, in general, independent on data volume and closely related to the data users’ needs. Despite the fact that no absolute and universal data quality measure exists, a variety of approaches to data quality definition by many authors has been proposed. However, the proposals of data quality assessment are useful only if the quality factors are measurable, the vectors of quality factors are comparable, and single data quality assessment methods can be extended on higher-order data structures. For this purpose, nontrivial mathematical tools of data quality assessment should be used. They make possible choosing a data quality method that is the most suitable one to any given application domain and to the data users’ requirements.

REFERENCES

Akilov, G. P., & Kukateladze S. S. (1978). Ordered vector spaces (in Russian). Nauka, Novosibirsk.

Baeza-Yates, R., & Ribeiro-Neto B. (1999). Modern information retrieval. Harlow, UK: Addison-Wesley.

Ballou, D. P., & Tayi G. K. (1999). Enhancing data quality in data warehouse environments. Communications of the ACM, 42(1), 73-80.

Beynon-Davies, P. (1998). Information systems development: An introduction to information systems engineering. Basingstoke: Macmillan Press Ltd.

Bongard, M. M. (1960). On the concept of “useful information” (in Russian). Problemy kibernetiki, vol. 4, Moscow.

Brillouin, L. (1956). Science and information theory. New York: Academic Press.

Bubnicki, Z. (2002).Uncertain logics, variables and systems. Berlin, Germany: Springer.

de La Beaujardiere, J. (2002). Interoperability in geospatial Web services. 18th International CODATA Conference: Book of Abstracts, Montreal, Canada (p. 8).

Hartley, R. V. L. (1928). Transmission of information. Bell System Technical Journal, 7(3), 535-563.

Kandel, A., Last, M., & Bunke, H. (2001). Data mining and computational intelligence. Heidelberg, Germany: Physica-Verlag.

Kharkevitsch, A. A. (1960). On information value (in Russian). Problemy kibernetiki, vol. 4, Moscow.

118

TEAM LinG

Data Quality Assessment

Kulikowski, J. L. (1969). Statistical problems of information flow in large-scale control systems. IV Congress of the IFAC, Technical Session 63,Warsaw.

Kulikowski, J. L. (1986). Principles of data usefulness evaluation (in Polish). In Technology and Methods of Distributed Data Processing, Part I (pp. 35-51). Vroclav: Vroclav Technological University,.

Kulikowski, J. L. (2002). Multi-aspect evaluation of data quality in scientific databases. 18th International CODATA Conference: Book of Abstracts, Montreal, Canada (p. 120).

Lagoze, C. (2002). The Open Archives Initiative: A lowbarrier framework for interoperability. 18th International CODATA Conference: Book of Abstracts, Montreal, Canada, 9.

Mohr, P. J., & Taylor, B. N. (2000). Data for the values of the CODATA fundamental constants. 17th International CODATA Conference: Book of Abstracts, Baveno, Italy, 137.

Oberweis, A., & Perc, P. (2000). Information quality in the World Wide Web. 17th International CODATA Conference: Book of Abstracts, Baveno, Italy, 16.

Orr, K. (1998). Data quality and systems theory. Communications of the ACM, 41(2), 66-71.

Piotrowski, J. (1992). Theory of physical and technical measurement. Amsterdam: Elsevier.

Pipino, L. L., Lee, Y. W., & Wang, R. Y. (2002). Data quality assessment. Communications of the ACM, 45(4), 211218.

Redman, T. C. (1998). The impact of poor data quality on the typical enterprise. Communications of the ACM, 41(2), 79-82.

Robbins, R. J. (2002). Integrating bioinformatics data into science: From molecules to biodiversity. 18th International CODATA Conference: Book of Abstracts, Montreal, Canada, 1.

Shanks, G., & Darke, P. (1998). A framework for understanding data quality. Journal of Data Warehousing,

3(3), 46-51.

Shannon, C. E., & Weaver, W. (1949). The mathematical theory of communication. Urbana: University of Illinois Press.

Stratonovitsch, R. L. (1975). Teoria informacii (in Russian). Sovetskoe Radio, Moscow.

Sugawara, H. (2002). Interoperability of biological data resources. 18th International CODATA Conference: Book D of Abstracts, Montreal, Canada, 9.

Wang, R. Y., Storey, V .C., & Firth, C. P. (1995). A framework for analysis of data quality research. IEEE Transactions on Knowledge and Data Engineering, 7(4), 623641.

Wang, R. Y., & Strong, D. (1996). Beyond accuracy: What data quality means to data consumers. Journal of Management Information Systems, 12(4), 5-34.

Wulff, H. R. (1981). Rationel klinik. Munksgaard, Copenhagen.

KEY TERMS

(The symbol → refers to the definitions of other terms defined below.)

Composite Data: Data containing an ordered string of fields describing several attributes (parameters, properties, etc.) of an object.

Data Accuracy: An aspect of numerical (→) data quality: a standard statistical error between a real parameter value and the corresponding value given by the data. Data accuracy is inversely proportional to this error.

Data Actuality: An aspect of (→) data quality consisting in its steadiness despite the natural process of data obsolescence increasing in time.

Data Completeness: Containing by a composite data all components necessary to full description of the states of a considered object or process.

Data Content: The attribute(-s) (state[-s], property[- ies], etc.) of a real or of an assumed abstract world to which the given data record is referred.

Data Credibility: An aspect of (→) data quality: a level of certitude that the (→) data content corresponds to a real object or has been obtained using a proper acquisition method.

Data Irredundancy: The lack of data volume that by data recoding could be removed without information loss.

Data Legibility: An aspect of (→) data quality: a level of data content ability to be interpreted correctly due to the known and well-defined attributes, units, abbreviations, codes, formal terms, etc. used in the data record’s expression.

119

TEAM LinG